© 2021. All rights reserved.

This page will serve as a sort of navigation index to all the various parts involved in getting a Word Prediction app up and running. The intended audience is someone looking to get into NLP (natural language processing), has an understanding of R or at least enough programming experience to read through snippets or R code, look around for online resources, and get a general understanding of what’s going on.

That being said I’ll keep any technical jargon limited and ideally anyone with an interest in word prediction will be able to take something away from the pages ahead.

The aim is to start from raw, English: News, Blog and Twitter data and produce an NLP data product (interactive online word prediction app) hosted at ShinyApps .

Throughout the project I found that R on its own isn’t enough to produce an word predictor with. You’ll find quite a few CSS, jquery, HTML based solutions.

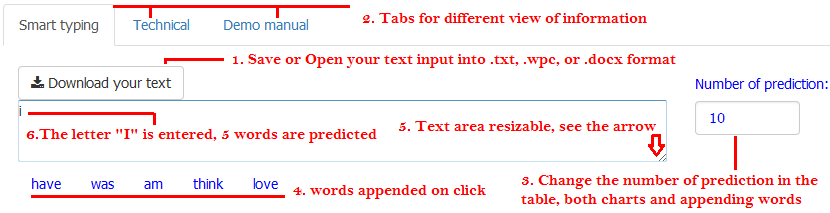

This application predicts the next word given a set of words, using a customized “Stupid Backoff” algorithm. The basic idea is to compute the probability of a word given some previous set of words. This application

The formula of the N-grams probability algorithm is expressed as follows:

\[S(w_i | w_{i-k+1}^{i-1}) =\begin{cases}count(w_{i-k+1}^{i})/count(w_{i-k+1}^{i-1}), & \text{if } count(w_{i-k+1}^{i})>0 \\\\[4ex]0.4S(w_i | w_{i-k+2}^{i-1}), & \text{otherwise}\end{cases}\] \[S(w_i|w_{i-1}) = \begin{cases}count(w_{i-1},w_i)/count(w_{i-1}), \text{ } \text{if } count(w_{i-1})>0, \text{ }\text{and if not } \\\\[4ex]\frac{count(w_{i-1},w_i)}{count(w_{i-1})}, \text{ } \text{if } count(w_{i-1}) \text{ }\text{has an approximate string matching, otherwise}\end{cases}\]\(S(w_i)=\frac{count(w_i)}{N}\)

S emphasizes that these are not probabilities but scores

More information for my NLP-Project can be found here: Building a Word Predictor. If you are looking for the word prediction shiny app I made for the Capstone Project, you can find it here: A ShinyApp Word Predictor